Publications

On Forgetting and Stability of Score-based Generative Models

Understanding the stability and long-time behavior of generative models is a fundamental problem in modern machine learning. This paper provides quantitative bounds on the sampling error of score-based generative models by leveraging stability and forgetting properties of the Markov chain associated with the reverse-time dynamics. Under weak assumptions, we identify two structural conditions that control the propagation of initialization and discretization errors in the backward process: a Lyapunov drift condition and a Doeblin-type minorization condition. A practical consequence is quantitative stability of the sampling procedure, as the reverse diffusion dynamics induce a contraction mechanism along the sampling trajectory. Overall, the results clarify the role of stochastic dynamics in score-based models and provide a principled framework for analyzing error propagation in such approaches.
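For readers unfamiliar with the two conditions named above, their textbook forms for a generic Markov kernel are recalled below. This is only an illustrative sketch: the kernel $P$, the function $V$, the set $C$, the measure $\nu$ and the constants $\lambda$, $b$, $\varepsilon$ are notation assumed here, and the precise assumptions used in the paper may differ.

$$
PV(x) := \int V(y)\, P(x, \mathrm{d}y) \;\le\; \lambda V(x) + b
\qquad \text{for all } x, \quad \text{with } V \ge 1,\ \lambda \in [0,1),\ b < \infty,
$$

$$
P(x, A) \;\ge\; \varepsilon\, \nu(A)
\qquad \text{for all } x \in C \text{ and all measurable } A, \quad \text{with } \varepsilon \in (0,1].
$$

The first inequality (Lyapunov drift) pushes the chain back toward regions where $V$ is small; the second (Doeblin-type minorization) makes chains started from different points of $C$ couple with probability at least $\varepsilon$. Together, conditions of this kind are the classical route to forgetting of the initial condition.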

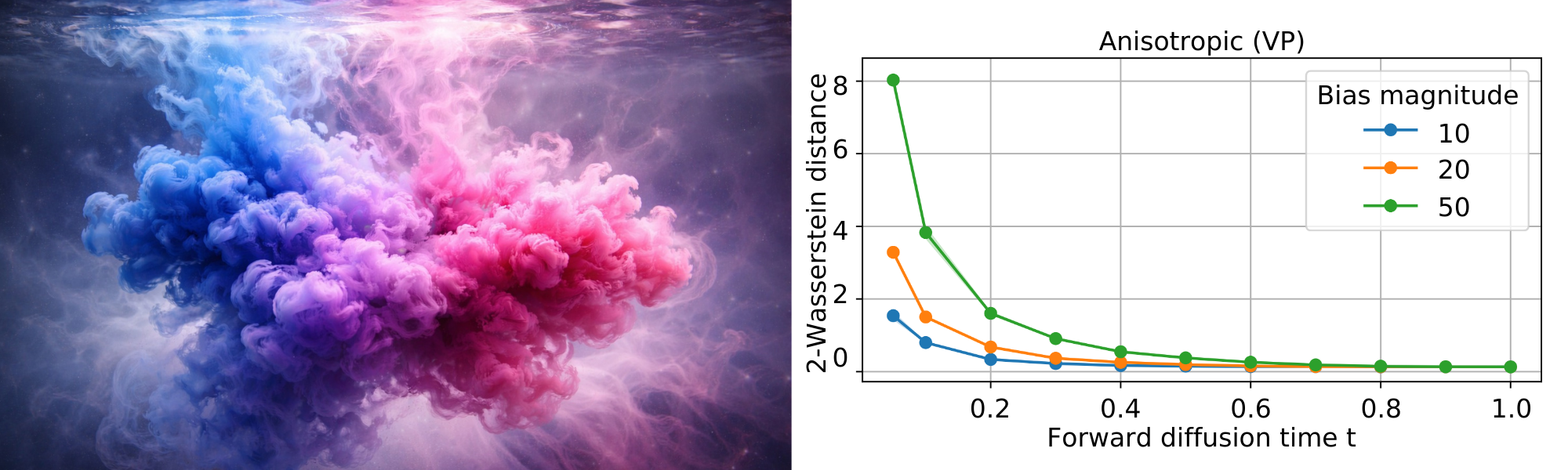

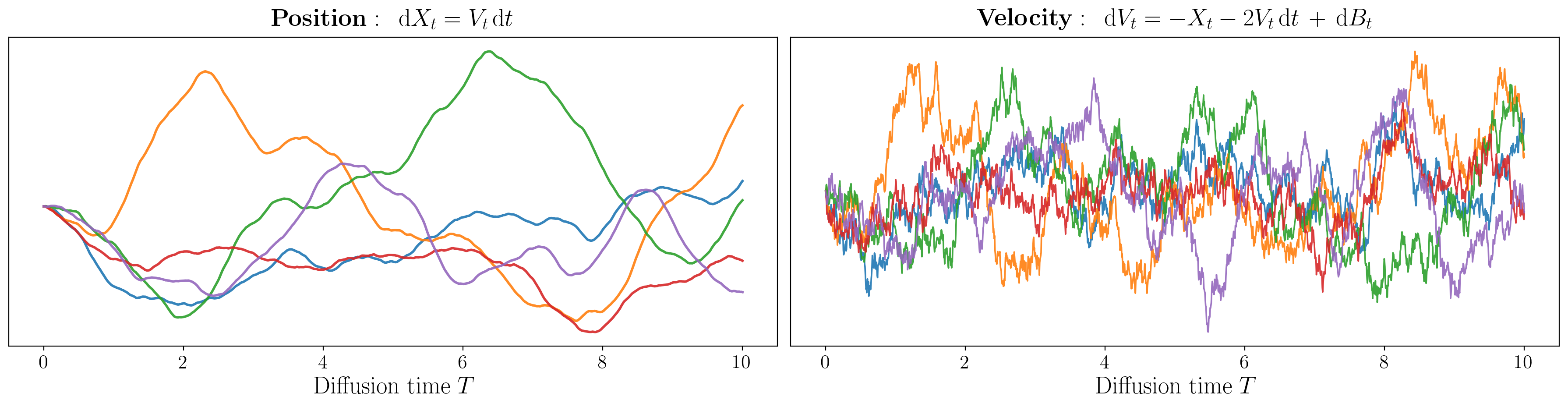

Wasserstein Convergence of Critically Damped Langevin Diffusions

We study the convergence guarantees of Critically Damped Langevin Diffusions (CLDs) for score-based generative modeling and provide, to the best of our knowledge, the first Wasserstein-2 upper bound for kinetic SGMs by analyzing the Lipschitz regularity of a modified score function. Our study also motivates the introduction of a noise-control hyperparameter that restores ellipticity by injecting noise across the entire state space. This choice has a measurable impact on generation quality on synthetic datasets.

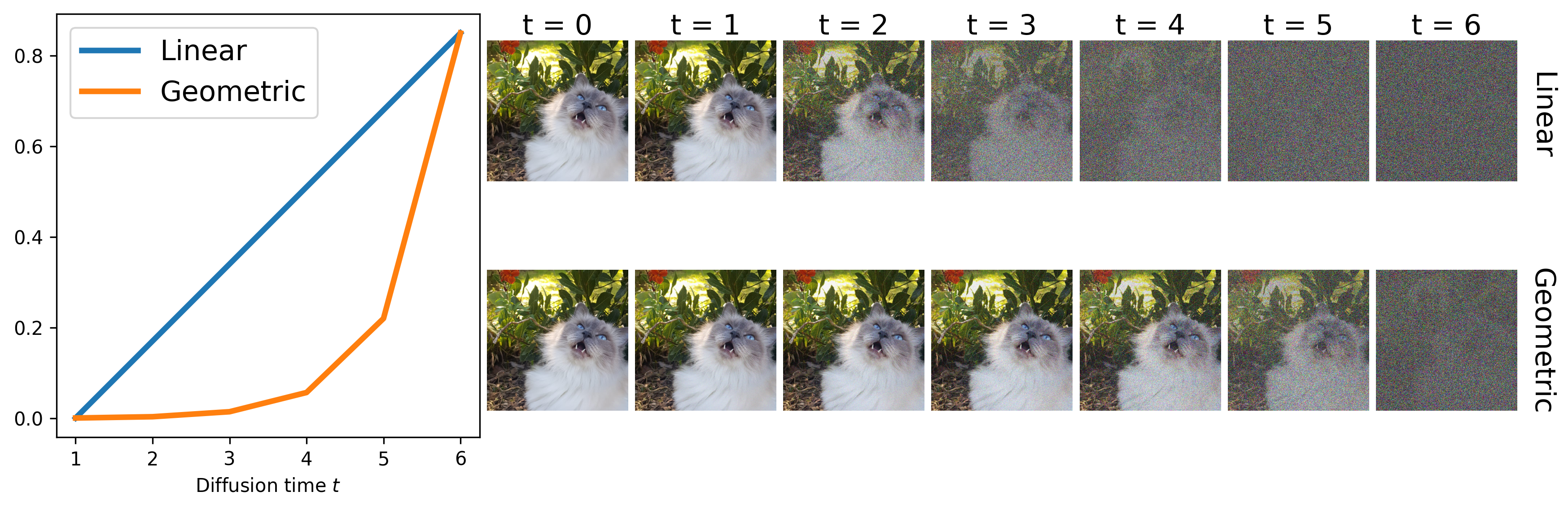

An Analysis of the Noise Schedule for Score-based Generative Models

We study how the choice of the noise schedule impacts the generation error in Score-based Generative Models (SGMs). We derive explicit upper bounds for both the Kullback–Leibler divergence and the Wasserstein-2 distance between the generated and target distributions, highlighting their dependence on the model hyperparameters and the regularity of the data distribution. The theoretical findings are supported by numerical experiments on synthetic and real datasets.
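To make the notion of a noise schedule concrete, here is a minimal sketch that assumes the standard variance-preserving forward SDE, dX_t = -½ β(t) X_t dt + √β(t) dW_t, which may not match the parameterization used in the paper. The function names and the default values `beta_min=0.1`, `beta_max=20.0` are illustrative assumptions. The sketch shows how the schedule β determines the Gaussian forward marginal X_t | X_0 ~ N(m(t) X_0, s(t)² I).

```python
import math


def integrated_linear_beta(t, beta_min=0.1, beta_max=20.0):
    """Integral over [0, t] of the linear schedule beta(u) = beta_min + u * (beta_max - beta_min).

    The schedule and its default endpoints are illustrative assumptions.
    """
    return beta_min * t + 0.5 * (beta_max - beta_min) * t ** 2


def integrated_constant_beta(t, beta=10.05):
    """Integral over [0, t] of a constant schedule beta(u) = beta (hypothetical comparison)."""
    return beta * t


def vp_marginal(t, integrated_beta):
    """Mean scale m(t) and standard deviation s(t) of X_t given X_0 under the assumed VP SDE."""
    big_b = integrated_beta(t)
    mean_scale = math.exp(-0.5 * big_b)          # m(t) = exp(-0.5 * int_0^t beta)
    std = math.sqrt(1.0 - math.exp(-big_b))      # s(t)^2 = 1 - exp(-int_0^t beta)
    return mean_scale, std


if __name__ == "__main__":
    for t in (0.1, 0.5, 1.0):
        m_lin, s_lin = vp_marginal(t, integrated_linear_beta)
        m_cst, s_cst = vp_marginal(t, integrated_constant_beta)
        print(f"t={t:.1f}  linear: m={m_lin:.4f}, s={s_lin:.4f}  "
              f"constant: m={m_cst:.4f}, s={s_cst:.4f}")
```

Both schedules here integrate to the same total noise at t = 1, so they reach the same terminal marginal while spending that noise budget differently along the way; that intermediate allocation is the kind of design choice the bounds above depend on.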

You can also find my articles on my Google Scholar profile.